Section: New Results

Interaction techniques

Participants: Géry Casiez, Fanny Chevalier, Stéphane Huot, Sylvain Malacria, Justin Mathew, Thomas Pietrzak, Nicolas Roussel.

Interaction in 3D environments

In virtual environments, interacting directly with our hands and fingers greatly contributes to the sense of immersion, especially when force feedback is provided to simulate the touch of virtual objects. Yet common haptic interfaces are unfit for multi-finger manipulation, and only costly and cumbersome grounded exoskeletons provide all the forces expected during object manipulation. To make multi-finger haptic interaction more accessible, we proposed DesktopGlove [18], a bimanual setup that combines two affordable haptic interfaces. With this approach, each hand is in charge of a different component of object manipulation: one commands the global motion of a virtual hand, while the other controls its fingers for grasping. In addition, each hand is subjected to forces that relate to its own degrees of freedom, so that users perceive a variety of haptic effects through both hands. Our results show that (1) users are able to integrate the separated degrees of freedom of DesktopGlove to efficiently control a virtual hand in a posing task, (2) DesktopGlove shows overall better performance than a traditional data glove and is preferred by users, and (3) users considered the separated haptic feedback realistic and accurate for manipulating objects in virtual environments.
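The split of degrees of freedom between the two hands can be illustrated with a small Python sketch. It makes a hypothetical assumption that one device reports a 3D position and the other a normalised finger closure in [0, 1]. The names `VirtualHand`, `combine_inputs` and `route_feedback` are illustrative only and do not come from DesktopGlove's actual software.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VirtualHand:
    position: Vec3  # global position of the virtual hand, driven by the "motion" hand
    grasp: float    # finger closure in [0, 1], driven by the "grasping" hand


def combine_inputs(motion_device_pos: Vec3, grasp_device_closure: float) -> VirtualHand:
    """Hypothetical bimanual mapping: each device controls a disjoint set of DOFs."""
    closure = min(max(grasp_device_closure, 0.0), 1.0)  # clamp to the valid range
    return VirtualHand(position=tuple(motion_device_pos), grasp=closure)


def route_feedback(contact_force: Vec3, grip_force: float) -> dict:
    """Send each force component back to the hand that controls the matching DOFs:
    collision forces go to the motion hand, grip resistance to the grasping hand."""
    return {"motion_device": contact_force, "grasp_device": grip_force}


hand = combine_inputs((0.10, 0.25, -0.05), 1.3)
print(hand)  # the out-of-range closure is clamped to 1.0
```

Because the two inputs never control the same degree of freedom, each force component has exactly one natural destination. This mirrors the separated feedback described above.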

We also investigated how head movements can serve to change the viewpoint in 3D applications, especially when the viewpoint needs to be changed quickly and temporarily to disambiguate the view. We studied how to use yaw and roll head movements to perform orbital camera control, i.e., to rotate the camera around a specific point in the scene [33], and reported on four user studies. Study 1 evaluated the useful resolution of head movements, and Study 2 assessed visual and physical comfort. Study 3 compared two interaction techniques designed by taking into account the results of the two previous studies; its results show that head roll is more efficient than head yaw for orbital camera control when interacting with a screen. Finally, Study 4 compared head roll with a standard technique relying on the mouse and keyboard; in a second stage, users were allowed to use both techniques as they saw fit. Results show that users preferred the head-control technique and were 14.5% faster with it.
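Orbital camera control driven by head roll can be sketched as a rotation of the camera position around the pivot point. The following Python sketch is hypothetical and is not the technique evaluated in [33]. It assumes a position-control mapping in which the orbit angle equals `gain` times the head roll measured from a neutral posture. It also assumes a small dead zone that filters out natural head jitter. The values of `gain` and `dead_zone_deg` are illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def orbit_from_head_roll(camera: Vec3, pivot: Vec3, roll_deg: float,
                         gain: float = 2.0, dead_zone_deg: float = 2.0) -> Vec3:
    """Rotate the camera around the pivot about the vertical (y) axis.

    Hypothetical mapping: orbit angle = gain * head roll (degrees), relative to a
    neutral head posture; rolls inside the dead zone leave the camera unchanged.
    """
    if abs(roll_deg) < dead_zone_deg:
        return camera
    angle = math.radians(gain * roll_deg)
    dx, dz = camera[0] - pivot[0], camera[2] - pivot[2]
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Rotation about the y axis: the camera keeps its height and its distance to the pivot.
    return (pivot[0] + dx * cos_a + dz * sin_a,
            camera[1],
            pivot[2] - dx * sin_a + dz * cos_a)


# A 45 degree roll with gain 2 orbits the camera by 90 degrees around the origin.
print(orbit_from_head_roll((0.0, 1.0, 5.0), (0.0, 0.0, 0.0), 45.0))
```

A complete implementation would also re-aim the camera at the pivot after each update. That step is omitted here.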

Storyboard sketching for stereo 3D films and Virtual Reality stories

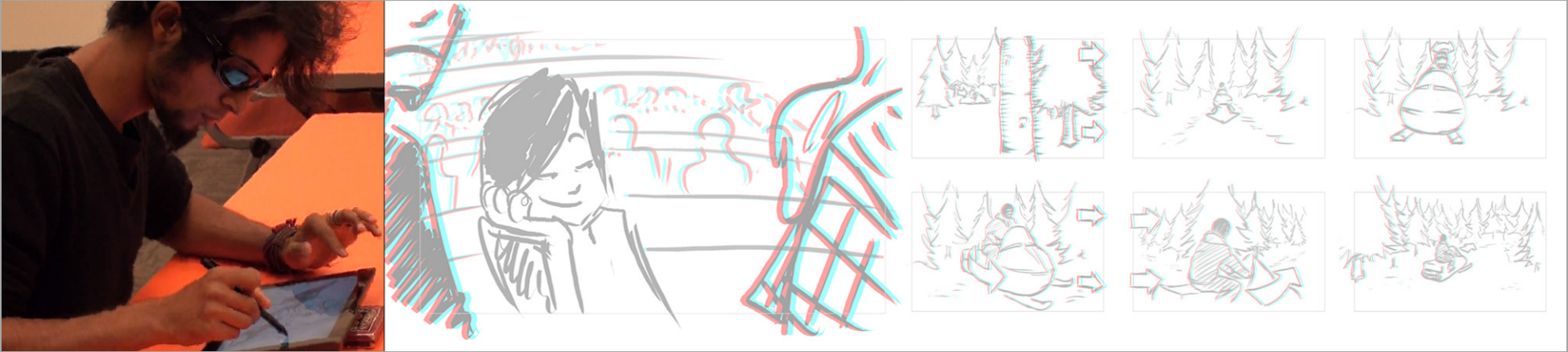

The resurgence of stereoscopic and Virtual Reality (VR) media has motivated filmmakers to evolve new stereo- and VR-cinematic vocabularies, as many principles of stereo 3D film and VR storytelling are unique to these media. Concepts such as plane separation, parallax position, and depth budgets in stereo, and presence, active experience, blocking, and stitching in VR, are missing from early planning due to the 2D nature of existing storyboards. To foresee difficulties exclusive to stereoscopy and VR, but also to exploit the unique possibilities of these media, the 3D and VR cinematography communities encourage filmmakers to start thinking in stereo/VR as early as possible. Yet there are very few early-stage tools to support the ideation and discussion of a stereoscopic film or a VR story. In current practice, traditional solutions for early visual development and design are either strictly 2D or require 3D modeling skills, and they produce content that is consumed passively by the creative team.

To fill this gap in the filmmakers' toolkit, we proposed Storeoboard [31], a system for stereo-cinematic conceptualization via storyboard sketching directly in stereo (Figure 6), and a novel multi-device system supporting the planning of virtual reality stories. Our tools are the first of their kind, allowing filmmakers to explore, experiment with, and conceptualize ideas in stereo or VR early in the film pipeline, develop new stereo- and VR-cinematic constructs, and foresee potential difficulties. Our solutions are the design outcome of interviews and field work with directors, stereographers, storyboard artists, and VR professionals. Our core contributions are thus: 1) a principled approach to the design and development of the first stereoscopic storyboard system, which allows the director and artists to explore both the stereoscopic space and concepts in real time, addressing key HCI challenges tied to sketching in stereoscopy; and 2) a principled survey of the state of the art in cinematic VR planning, used to design the first multi-device system that supports a storyboard workflow for VR film. We evaluated our tools with focus groups and individual user studies with storyboard artists and industry professionals. In [31], we also report on feedback from the director of a live-action feature film on which Storeoboard was deployed. Results suggest that our approaches provide the speed and functionality needed for early-stage planning, and the artifacts needed to properly discuss stereoscopic and VR films.


Tactile displays and vibrotactile feedback

Tactile displays have predominantly been used for information transfer using patterns or as assistive feedback for interactions. Hardware can now convey increasingly rich tactile information that mirrors visual information, and wearables that remain in constant contact with the skin are increasingly viable. Together, these advances make a compelling argument for exploring tactile interactions as rich as those of visual displays. As Direct Manipulation underlies many of the advances in visual interaction, we introduced Direct Manipulation-enabled Tactile displays (DMTs) [29]. We defined the concepts of a tactile screen, tactile pixel, tactile pointer, and tactile target, which enable tactile pointing, selection, and drag & drop. We built a proof-of-concept tactile display and studied its precision limits. We further developed a performance model for DMTs based on a tactile target acquisition study, and studied user performance in a real-world DMT menu application. The results show that users are able to use the application with relative ease and speed.
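The four DMT concepts can be made concrete with a small model. The following Python sketch is hypothetical and is not the implementation from [29]. It rests on three assumptions. First, a tactile screen is a grid of actuators (tactile pixels). Second, the tactile pointer occupies one pixel and is moved by relative input. Third, a tactile target is a rectangle of pixels that is rendered only while the pointer is over it.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

Pixel = Tuple[int, int]  # (column, row) of one tactile pixel (actuator)


@dataclass(frozen=True)
class TactileTarget:
    name: str
    col: int
    row: int
    width: int
    height: int

    def contains(self, p: Pixel) -> bool:
        return (self.col <= p[0] < self.col + self.width
                and self.row <= p[1] < self.row + self.height)

    def pixels(self) -> Set[Pixel]:
        return {(c, r) for c in range(self.col, self.col + self.width)
                for r in range(self.row, self.row + self.height)}


class TactileScreen:
    """Hypothetical tactile screen: a grid of actuators with a one-pixel pointer."""

    def __init__(self, cols: int, rows: int, targets: List[TactileTarget]):
        self.cols, self.rows = cols, rows
        self.targets = targets
        self.pointer: Pixel = (0, 0)

    def move_pointer(self, dcol: int, drow: int) -> None:
        """Relative pointer motion, clamped to the edges of the tactile screen."""
        col = min(max(self.pointer[0] + dcol, 0), self.cols - 1)
        row = min(max(self.pointer[1] + drow, 0), self.rows - 1)
        self.pointer = (col, row)

    def hovered(self) -> Optional[TactileTarget]:
        return next((t for t in self.targets if t.contains(self.pointer)), None)

    def frame(self) -> Set[Pixel]:
        """Pixels to actuate: the pointer, plus the hovered target (if any)."""
        target = self.hovered()
        return {self.pointer} | (target.pixels() if target else set())

    def select(self) -> Optional[str]:
        target = self.hovered()
        return target.name if target else None


screen = TactileScreen(4, 4, [TactileTarget("menu", 2, 2, 2, 1)])
screen.move_pointer(2, 2)
print(screen.select(), sorted(screen.frame()))  # menu [(2, 2), (3, 2)]
```

Drag & drop could be added on top of this model by moving a selected target together with the pointer until release.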

We have also explored vibrotactile feedback on wearable devices such as smartwatches and activity trackers, which are becoming prevalent. These devices provide continuous information about health and fitness and offer personalized progress monitoring, often through multimodal feedback with embedded visual, audio, and vibrotactile displays. Vibrations are particularly useful for discreet feedback: users do not have to look at a display, and others do not notice it, which preserves the flow of the primary activity. Yet current use of vibrations is limited to basic patterns, since representing more complex information with a single actuator is challenging. Moreover, it is unclear how much the user's current physical activity may interfere with their understanding of the vibrations. We addressed both issues through the design and evaluation of ActiVibe, a set of vibrotactile icons designed to represent progress through the values 1 to 10 [24]. We demonstrated a recognition rate of over 96% in a laboratory setting using a commercial smartwatch. ActiVibe was also evaluated in situ with 22 participants over a 28-day period. We showed that the recognition rate is 88.7% in the wild, identified factors that affect recognition, and provided design guidelines for communicating progress via vibrations.
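To illustrate how a single actuator can convey a value from 1 to 10, here is a hypothetical encoding in Python. It is not the ActiVibe icon set from [24]. In this sketch, a long pulse counts for five and a short pulse for one, so 7 becomes one long pulse followed by two short ones. The pulse and gap durations are assumed values.

```python
from typing import List, Tuple

Pulse = Tuple[int, int]  # (vibration on-time in ms, pause after it in ms)

LONG_MS, SHORT_MS, GAP_MS = 600, 150, 250  # assumed durations, not taken from [24]


def encode_progress(value: int) -> List[Pulse]:
    """Hypothetical single-actuator icon: long pulses count 5, short pulses count 1."""
    if not 1 <= value <= 10:
        raise ValueError("progress value must be in 1..10")
    longs, shorts = divmod(value, 5)
    return [(LONG_MS, GAP_MS)] * longs + [(SHORT_MS, GAP_MS)] * shorts


def decode_progress(pattern: List[Pulse]) -> int:
    """Inverse mapping: classify each pulse as long or short by its on-time."""
    threshold = (LONG_MS + SHORT_MS) / 2
    return sum(5 if on >= threshold else 1 for on, _ in pattern)


print(encode_progress(7))  # [(600, 250), (150, 250), (150, 250)]
```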

Force-based autoscroll

Autoscroll, also known as edge-scrolling, is a common interaction technique in graphical interfaces that allows users to scroll a viewport while in dragging mode: once in dragging mode, the user moves the pointer near the viewport's edge to trigger “automatic” scrolling. Despite the technique's wide use, existing autoscroll methods suffer from several limitations [45]. First, most autoscroll methods over-rely on the size of the control area: the larger it is, the faster the scrolling rate can be. The level of control therefore depends on the available distance between the viewport and the edge of the display, which can be limited, for example with small displays or when the view is maximized. Second, depending on the task, the user's intention can be ambiguous. For example, when dragging and dropping a file, the user's target may be located within the initial viewport or in a different one on the same display. To reduce this ambiguity, the control area is drastically smaller for drag-and-drop operations. This in turn limits scrolling rate control, as the user has only a small input area with which to control the scrolling speed.
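A minimal model of position-based autoscroll makes the first limitation visible. The Python sketch below is hypothetical. It assumes a rate that grows linearly with how far the pointer travels past the viewport's bottom edge, with an illustrative `gain` in pixels/s per pixel. Because the pointer cannot leave the display, the maximum rate is bounded by the gap between the viewport and the display edge.

```python
def edge_scroll_rate(pointer_y: float, viewport_bottom: float,
                     display_bottom: float, gain: float = 20.0) -> float:
    """Hypothetical position-based autoscroll rate (pixels/s) for the bottom edge.

    The rate is proportional to the distance travelled past the viewport edge,
    but the pointer is clamped to the display, so the reachable maximum is
    gain * (display_bottom - viewport_bottom).
    """
    if pointer_y <= viewport_bottom:
        return 0.0
    overshoot = min(pointer_y, display_bottom) - viewport_bottom
    return gain * overshoot


print(edge_scroll_rate(2000, 1000, 1080))  # 80 px of room: capped at 1600.0
print(edge_scroll_rate(2000, 1080, 1080))  # maximized view: no room, 0.0
```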

We explored how force-sensing input, now available on commercial devices such as the Apple Magic Trackpad 2 or iPhone 6S, can be used to overcome the limitations of autoscroll. Force sensing is an interesting candidate for two reasons. First, users often already apply a (relatively soft) force on the input device when using autoscroll. Second, varying the force on the input device does not require moving the pointer, which makes it possible to give users control while keeping a small and consistent control area regardless of the task and the device. We designed ForceEdge, a novel interaction technique that maps the force applied on a trackpad to the autoscrolling rate [19]. We implemented a software interface that can be used to design different transfer functions mapping force to autoscrolling rate, and to test these mappings in text selection and drag-and-drop tasks. Our pilot studies showed encouraging results; future work will focus on conducting more robust evaluations and on testing ForceEdge on mobile devices.
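A force-to-rate transfer function of the kind that ForceEdge's design interface lets designers explore might look like the following. This Python sketch is hypothetical: the threshold, saturation force, maximum rate and quadratic exponent are assumed values, not those of [19]. The threshold absorbs the soft force users already apply while dragging, so scrolling starts only when they press deliberately.

```python
def force_to_rate(force: float, threshold: float = 50.0, saturation: float = 400.0,
                  max_rate: float = 3000.0, exponent: float = 2.0) -> float:
    """Hypothetical ForceEdge-style transfer function.

    force      : force reported by the trackpad (arbitrary units, e.g. grams)
    threshold  : resting force below which no scrolling occurs
    saturation : force at which the maximum rate is reached
    returns    : autoscroll rate in pixels/s
    """
    if force <= threshold:
        return 0.0
    t = min((force - threshold) / (saturation - threshold), 1.0)  # normalised in (0, 1]
    return max_rate * t ** exponent  # exponent > 1 gives finer control at low force


for f in (40, 225, 400, 600):
    print(f, force_to_rate(f))
```

Unlike the position-based rate, this function does not depend on the pointer's distance to any edge. The control area can therefore stay small and identical for text selection and drag-and-drop.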

Combined brain and gaze inputs for target selection

Gaze-based interfaces and Brain-Computer Interfaces (BCIs) allow for hands-free human–computer interaction. We investigated the combination of gaze and BCIs and proposed a novel selection technique for 2D target acquisition based on input fusion. This approach combines the probabilistic models of each input in order to better estimate the user's intent. We evaluated its performance against existing gaze and brain–computer interaction techniques. Twelve participants took part in our study, in which they had to search for and select 2D targets with each of the evaluated techniques. Our fusion-based hybrid interaction technique was found to be more reliable than previous hybrid gaze-and-BCI techniques for 10 of the 12 participants, while being 29% faster on average. However, as has been observed in hybrid gaze-and-speech interaction, the gaze-only technique still provides the best performance. Our results should encourage the use of input fusion, as opposed to sequential interaction, to design better hybrid interfaces [14].
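The idea of fusing the two probabilistic models, rather than using the inputs one after the other, can be sketched as a naive Bayes combination. The Python code below illustrates this under stated assumptions and is not the model from [14]. The gaze likelihood for each target is assumed to be an isotropic Gaussian of the distance between the gaze point and the target centre, with a hypothetical spread `sigma` in pixels. The BCI classifier is assumed to output a probability per target. Both inputs are assumed to be conditionally independent given the intended target.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def gaze_likelihood(gaze: Point, target: Point, sigma: float = 40.0) -> float:
    """Isotropic Gaussian likelihood of the observed gaze point given the target."""
    d2 = (gaze[0] - target[0]) ** 2 + (gaze[1] - target[1]) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def fuse(gaze: Point, targets: Sequence[Point], bci_probs: Sequence[float],
         sigma: float = 40.0) -> List[float]:
    """Posterior over targets, assuming a uniform prior and independent inputs:
    P(target | gaze, bci) is proportional to P(gaze | target) * P(target | bci)."""
    scores = [gaze_likelihood(gaze, t, sigma) * p for t, p in zip(targets, bci_probs)]
    total = sum(scores)
    if total == 0.0:  # degenerate case: fall back to a uniform posterior
        return [1.0 / len(targets)] * len(targets)
    return [s / total for s in scores]


# Gaze lands between two targets; the BCI output breaks the tie.
posterior = fuse((100.0, 100.0), [(80.0, 100.0), (120.0, 100.0)], [0.3, 0.7])
print([round(p, 2) for p in posterior])  # [0.3, 0.7]
```

In a design like this, a selection might be triggered once the largest posterior exceeds a confidence threshold. Both inputs contribute evidence at every step instead of being used in sequence.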

Actuated desktop devices

The desktop workstation remains the most common setup for office tasks such as text editing, CAD, data analysis, or programming. Several studies have investigated how users interact with their devices (e.g. pressing keyboard keys or moving the cursor). However, it is not clear how users arrange their devices on the desk, or whether these existing behaviors can be leveraged.

We designed the LivingDesktop [22], an augmented desktop with devices capable of moving autonomously. The LivingDesktop can control the position and orientation of the mouse, keyboard, and monitors. It offers different degrees of control for both the system (autonomous, semi-autonomous) and the user (manual, semi-manual), as well as different perceptive qualities (visual, haptic) thanks to a large range of device motions. We implemented a proof-of-concept of the LivingDesktop that combines a rail, a robotic base, and magnetism to control the position and orientation of the devices. This new setup presents several interesting features:
(1) it improves ergonomics by continuously adjusting the position of its devices, helping users adopt ergonomic postures and avoid static postures for extended periods;
(2) it facilitates collaborative work between local (e.g. located in the office) and remote co-workers;
(3) it leverages context, reacting to the position of the user in the office, the presence of physical objects (e.g. tablets, food), or the user's current activity in order to maintain a high level of comfort;
(4) it reinforces physicality within the desktop workstation to increase immersion.

We conducted a scenario-based evaluation of the LivingDesktop. Our results showed the perceived usefulness of collaborative and ergonomic applications. They also showed how the LivingDesktop inspired our participants to elaborate novel application scenarios, including social communication and accessibility.

Latency compensation

Human-computer interaction is greatly affected by the latency between the human input and the system's visual response, and compensating for this latency is an important problem for the HCI community. We developed a simple forecasting algorithm, based on numerical differentiation, for latency compensation in indirect interaction using a mouse. Several differentiators were compared, including a novel algebraic version, and an optimized procedure was developed for tuning the parameters of the algorithm. Its efficiency was demonstrated on real data measured with a 1 ms sampling time. These results are developed in [37], and a patent has been filed on a subsequent technique for latency compensation [42].
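Forecasting by numerical differentiation can be illustrated with a second-order Taylor extrapolation. The Python sketch below uses plain backward finite differences, not the algebraic differentiator or the tuned procedure of [37]; the function `forecast` and its parameters are hypothetical. Given positions sampled every `dt` time units, it estimates velocity and acceleration from the last three samples. It then extrapolates ahead by the latency to be compensated.

```python
from typing import Sequence


def forecast(samples: Sequence[float], dt: float, horizon: float) -> float:
    """Predict the position `horizon` time units after the last sample.

    samples : recent positions along one axis, sampled every `dt` time units
    Uses second-order backward differences, which are exact for quadratic motion:
      v ~ (3 x[k] - 4 x[k-1] + x[k-2]) / (2 dt)
      a ~ (x[k] - 2 x[k-1] + x[k-2]) / dt^2
    """
    if len(samples) < 3:
        raise ValueError("need at least three samples")
    x0, x1, x2 = samples[-1], samples[-2], samples[-3]
    v = (3.0 * x0 - 4.0 * x1 + x2) / (2.0 * dt)
    a = (x0 - 2.0 * x1 + x2) / dt ** 2
    return x0 + v * horizon + 0.5 * a * horizon ** 2


# Mouse x-positions sampled every 1 ms; compensate a hypothetical 20 ms latency.
xs = [100.0, 102.0, 104.0]  # constant velocity of 2 px/ms
print(forecast(xs, dt=1.0, horizon=20.0))  # 144.0
```

Each mouse axis would be forecast independently. Finite differences amplify measurement noise, increasingly so as the horizon grows. This is one reason why the choice and tuning of the differentiator matter in practice.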